3D Printing Developments Elevate Product Imagery

3D Printing Developments Elevate Product Imagery - High fidelity virtual models drive new image production

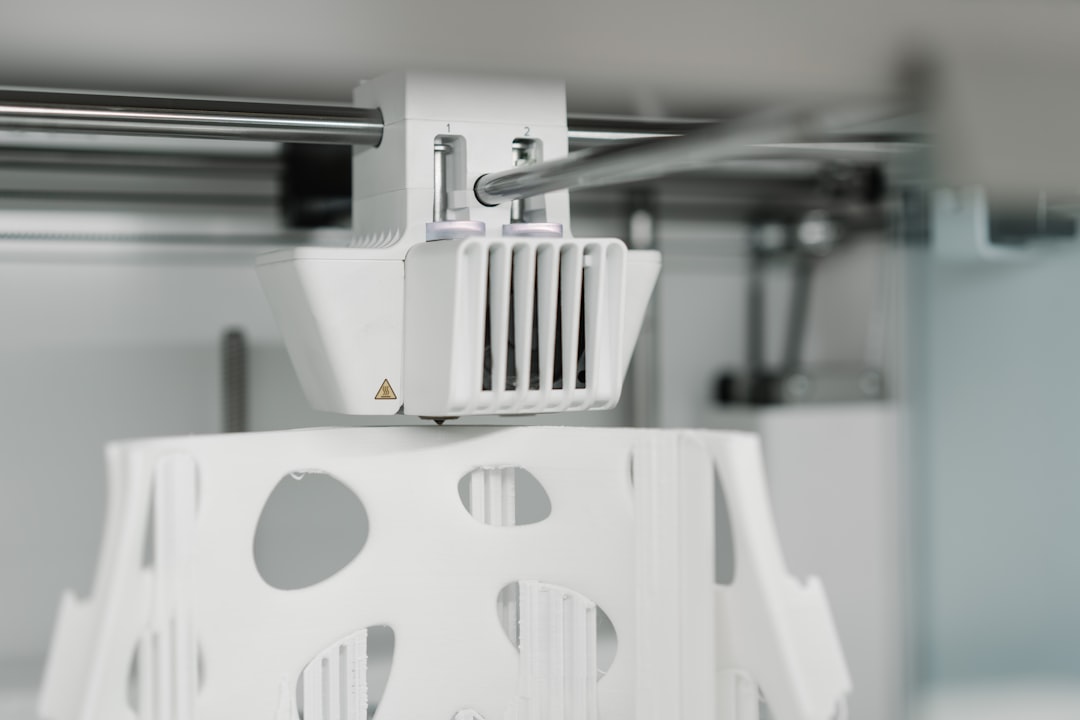

Product imagery is changing as high-fidelity virtual models become more sophisticated. These digital models let online retailers generate highly realistic product depictions, often without traditional studio photography. They offer fine detail and flexibility, allowing presentations tailored to different consumer tastes. As AI image synthesis improves, the line between photographs and computer-generated visuals blurs, raising questions about authenticity and how audiences perceive what they see. The shift improves the online browsing experience, but it also challenges sellers to engage their audience authentically amid a growing volume of hyper-realistic, non-physical imagery.

The underlying physics simulations within these advanced virtual models have become remarkably sophisticated. By meticulously mapping how light interacts with surfaces down to an almost microscopic level – accounting for everything from how it scatters just beneath the surface to how it reflects differently depending on the viewing angle – digital representations of materials can now rival their physical counterparts. From a technical standpoint, distinguishing a meticulously crafted digital fabric from its real-world twin under close scrutiny is becoming increasingly challenging, highlighting the impressive strides in rendering fidelity.
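To make the viewing-angle dependence concrete, here is a minimal sketch using Schlick's approximation of Fresnel reflectance, a formula widely used in physically based rendering. It is only an illustration, not a description of any specific renderer; the base reflectance of 0.04 is an assumed value commonly used for plastic-like dielectrics.

```python
import math


def schlick_fresnel(cos_theta: float, f0: float) -> float:
    """Schlick's approximation: fraction of light reflected at a surface.

    cos_theta is the cosine of the angle between the view direction and the
    surface normal; f0 is the reflectance when looking straight at the surface.
    """
    cos_theta = min(max(cos_theta, 0.0), 1.0)  # clamp to the valid range
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


# Assumed base reflectance for a plastic-like dielectric (illustrative value).
F0_DIELECTRIC = 0.04

for angle_deg in (0, 45, 80, 89):
    cos_theta = math.cos(math.radians(angle_deg))
    reflectance = schlick_fresnel(cos_theta, F0_DIELECTRIC)
    print(f"{angle_deg:>2} deg -> reflectance {reflectance:.3f}")
```

Reflectance stays close to the base value at head-on angles and rises sharply toward grazing angles, which is why the same material looks glossier when viewed edge-on.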

What once took hours of computation is now nearly instantaneous. Thanks to leaps in specialized processor architectures and the widespread adoption of real-time ray tracing techniques, generating complex, photorealistic product visuals is no longer a bottleneck. This speed fundamentally shifts the workflow: "virtual photography" can now be an interactive, exploratory process. Designers can tweak materials, lighting, or camera angles and see the finished result update almost instantly, enabling a truly rapid cycle of experimentation and refinement. This immediate feedback loop is powerful, though the sheer computational power required for such feats is often underestimated.

Moving beyond just the product itself, sophisticated generative artificial intelligence models are increasingly capable of constructing entire virtual environments around an item. They can conjure up anything from interior living spaces complete with furniture and decor to outdoor landscapes, intelligently placing the product within these generated scenes. While this automates a significant portion of what was previously manual scene composition – potentially offering an endless array of backdrops – a human touch is often still required to ensure the generated context truly aligns with the brand narrative or desired aesthetic. The 'intelligence' of such staging, while impressive, isn't always foolproof or nuanced.

Perhaps less intuitively, these detailed virtual models are proving invaluable as a source of 'synthetic data' for training other AI systems. By rendering products in various orientations, lighting conditions, and environments, millions of perfectly labeled images can be generated. This precisely controlled data can then be fed into algorithms for tasks like robotic object recognition in warehouses or advanced visual search on retail platforms, potentially speeding up AI development cycles considerably. However, while efficient, relying solely on synthetic data can sometimes introduce subtle biases or a lack of real-world noise, requiring careful validation against actual physical data.
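As a rough sketch of how such a labeled dataset might be planned, the snippet below enumerates every combination of orientation, lighting, and environment for one product and attaches the label to each render job. The parameter lists, the `build_render_jobs` helper, and the product ID are all hypothetical; the actual rendering step is left out.

```python
import itertools
import json

# Hypothetical variation axes; a real pipeline would have many more values.
ORIENTATIONS_DEG = [0, 90, 180, 270]
LIGHTING = ["softbox", "window", "overcast"]
ENVIRONMENTS = ["studio", "kitchen", "warehouse_shelf"]


def build_render_jobs(product_id):
    """Return one labeled job description per parameter combination."""
    jobs = []
    combos = itertools.product(ORIENTATIONS_DEG, LIGHTING, ENVIRONMENTS)
    for index, (yaw, light, env) in enumerate(combos):
        jobs.append({
            "image": f"{product_id}_{index:04d}.png",  # planned output file
            "label": product_id,  # ground truth is known by construction
            "yaw_deg": yaw,
            "lighting": light,
            "environment": env,
        })
    return jobs


jobs = build_render_jobs("mug-001")
print(len(jobs))
print(json.dumps(jobs[0]))
```

Because every image is produced from a known configuration, the labels never need manual annotation. The validation against real photographs mentioned above would still be a separate step.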

Finally, there's the environmental aspect. Traditional physical photoshoots often involve shipping prototypes, constructing temporary sets, and later disposing of materials. Replacing them with virtual alternatives can cut logistics-related emissions and material waste. The computational energy demands of rendering are not negligible and should be counted against those savings, but the overall picture suggests a net positive contribution to sustainability within the product visualization workflow.

3D Printing Developments Elevate Product Imagery - Automated staging using synthetic environments

The core idea of automated staging using synthetic environments, particularly relevant for digital retail, is reaching a new level of sophistication. This involves systems not just creating virtual backgrounds but intelligently positioning and arranging products within them without significant human input. While this promises unprecedented speed and scale in creating diverse product displays, offering virtually endless contexts for an item, a critical look reveals certain limitations. The 'intelligence' driving these automated setups, while advanced, often struggles to grasp the subtle emotional or narrative nuances crucial to a brand's identity, sometimes resulting in visually polished but ultimately generic or disconnected presentations. This emerging capability pushes practitioners to weigh the efficiency gains against the potential loss of a truly distinctive and authentic visual voice.

The ability to craft a digital scene has moved beyond just placing objects; sophisticated algorithms are now analyzing vast quantities of observed user interaction. This allows these systems to not merely generate an environment, but to infer and construct compositions statistically most likely to resonate, essentially "learning" what elements or layouts drive a specific kind of engagement. There's a subtle but important shift from "making a scene" to "optimizing for impact."

Further along this path, some systems are integrating real-time insights from individual browsing behavior or inferred demographics. This means the digital backdrop for a product might subtly, or even dramatically, shift from one visitor to the next, aiming to create a highly personalized visual context. The ambition here is to move from broad segmentation to truly individual "virtual storefronts," though the technical and ethical implications of such pervasive customization warrant ongoing scrutiny.

One compelling engineering advantage stems from the tight control afforded by a virtual setup. Physical shoots inevitably vary in light, lens perspective, and even prop placement, whereas a synthetic environment can reproduce the same lighting, camera, and layout exactly across potentially millions of product variations and marketing deployments. This makes possible a uniform brand aesthetic that would be prohibitively expensive, if not impossible, to achieve with traditional methods.

Intriguingly, the utility extends beyond just marketing visuals. We're seeing greater integration of these virtual staging capabilities directly into product development pipelines, particularly with Product Lifecycle Management (PLM) systems. Engineers can now drop early-stage digital designs into plausible real-world contexts, assessing usability or aesthetic fit long before any physical material is consumed. This "virtual prototyping within context" has the potential to dramatically compress design-to-market timelines, shifting design reviews to the digital realm.

Perhaps the most fascinating, and indeed, somewhat unsettling, development is the escalating fidelity of these generated backdrops. The quality has reached a point where distinguishing a sophisticated synthetic environment from a genuine photograph can become a non-trivial task for digital forensic tools. This isn't just about general audience perception anymore; it raises more profound questions about the future of image verification and the objective 'truth' presented in digital assets, prompting a need for new analytical methods.

3D Printing Developments Elevate Product Imagery - Streamlining image workflows for rapid deployment

The relentless push to accelerate image production for online retail continues to transform how goods are showcased. While the ability to quickly generate highly realistic visuals through advanced rendering and artificial intelligence has largely matured, the evolving challenge isn't just creating imagery, but effectively weaving this immense volume of diverse outputs into adaptable marketing efforts. The speed at which varied images can now be deployed brings both immense potential and a fresh set of organizational complexities. It requires a critical focus on maintaining a unified brand story across countless iterations, ensuring each visual piece genuinely connects with its audience, rather than simply adding to the digital noise.

Within that push, automated pipelines for product visuals are moving beyond content generation alone to checking and improving the fidelity of the visual output itself, at scale.

One significant advancement lies in the automated quality control of these rendered visuals. Algorithms can now scrutinize generated product images on their own, looking for rendering artifacts too small for the human eye to notice and for subtle geometric inconsistencies within the model. This removes much of the manual quality assurance work before release, though deciding whether a flagged "imperfection" is actually an intended aesthetic choice still requires human judgment.
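One very simple form such a check could take is flagging pixels that are non-finite or implausibly bright, which is how isolated "firefly" artifacts often show up in rendered output. The sketch below works on a small grid of luminance values; the `find_suspect_pixels` helper and the threshold ratio of 8 are assumptions for illustration, and production checks would be considerably more elaborate.

```python
import math
import statistics


def find_suspect_pixels(image, ratio=8.0):
    """Return (row, col) positions that are non-finite or far above the median.

    image is a list of rows of luminance values. The ratio threshold is an
    illustrative assumption, not a standard value.
    """
    finite = [v for row in image for v in row if math.isfinite(v)]
    median = statistics.median(finite)
    threshold = ratio * max(median, 1e-6)  # guard against an all-black image
    suspects = []
    for r, row in enumerate(image):
        for c, value in enumerate(row):
            if not math.isfinite(value) or value > threshold:
                suspects.append((r, c))
    return suspects


# Toy 3x3 luminance grid with one very bright pixel and one NaN.
image = [
    [0.20, 0.22, 0.21],
    [0.19, 9.50, 0.20],
    [0.21, float("nan"), 0.22],
]
print(find_suspect_pixels(image))
```

A global median is a crude baseline: a legitimately bright highlight would also be flagged, which is one reason a human review step for flagged images remains useful.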

Beyond individual images, the frontier of procedural content generation is being heavily explored. Leveraging generative AI, systems can now take a single foundational product model and instantly generate entire catalogs. This includes hundreds of unique variations in material properties, color palettes, and even subtle modifications to the product’s form. This capacity drastically speeds up the visual manifestation of new product lines, though managing the sheer volume of output to maintain cohesive brand identity often becomes the new challenge.

Another fascinating, and somewhat unsettling, development involves neuro-adaptive rendering. Cutting-edge systems are beginning to incorporate algorithms that subtly adjust factors like the product's placement within a scene, the nuance of its illumination, or even the virtual camera’s perspective. These adjustments occur in real-time, informed by inferences about where a viewer's eye might naturally gravitate, aiming to optimize for subconscious visual engagement. It’s an impressive feat of predictive visual psychology, raising questions about the ethics of such subtly manipulative digital environments.

For the human operators still steering these increasingly autonomous workflows, the interface is evolving. Virtual photography setups are now integrating haptic feedback devices, allowing designers to experience a physical sensation akin to the subtle texture of a virtual material or the measured resistance when manipulating a digital light source. This offers a compelling bridge between purely digital interaction and a tactile, craftsman-like feel, although achieving truly convincing haptic fidelity for complex surface properties remains a substantial engineering hurdle.

Finally, the efficiency gains extend to the foundational 3D models themselves. Specialized AI modules are being deployed within image pipelines to automatically detect and rectify complex geometric imperfections or unoptimized mesh structures in incoming 3D product data. This proactive cleaning significantly reduces the need for manual asset preparation, ensuring that the rendering engine always receives clean, efficient data, thereby preventing delays. However, deeply intricate or highly stylized organic models often still demand a seasoned human hand for truly optimal results.
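The AI modules described here are not specified in detail, but the kind of defect they target can be illustrated with a plain rule-based sketch: merging exactly duplicated vertices and dropping triangles that collapse to a line or point. The `clean_mesh` and `triangle_area` helpers and the toy mesh are hypothetical.

```python
def triangle_area(p, q, r):
    """Area of a 3D triangle from the cross product of two edge vectors."""
    ux, uy, uz = (q[i] - p[i] for i in range(3))
    vx, vy, vz = (r[i] - p[i] for i in range(3))
    cx = uy * vz - uz * vy
    cy = uz * vx - ux * vz
    cz = ux * vy - uy * vx
    return 0.5 * (cx * cx + cy * cy + cz * cz) ** 0.5


def clean_mesh(vertices, triangles, area_eps=1e-12):
    """Merge exact duplicate vertices and drop degenerate triangles."""
    remap = {}       # vertex position -> new index
    unique = []      # deduplicated vertex list
    index_map = []   # old index -> new index
    for v in vertices:
        key = tuple(v)
        if key not in remap:
            remap[key] = len(unique)
            unique.append(key)
        index_map.append(remap[key])

    kept = []
    for a, b, c in triangles:
        a, b, c = index_map[a], index_map[b], index_map[c]
        if len({a, b, c}) < 3:
            continue  # two corners share a vertex after merging
        if triangle_area(unique[a], unique[b], unique[c]) <= area_eps:
            continue  # corners are collinear, so the face has no area
        kept.append((a, b, c))
    return unique, kept


# Toy mesh: vertex 3 duplicates vertex 1; vertex 4 is collinear with 0 and 1.
vertices = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 0, 0), (2, 0, 0)]
triangles = [(0, 1, 2), (0, 1, 3), (0, 1, 4)]
clean_vertices, clean_triangles = clean_mesh(vertices, triangles)
print(len(clean_vertices), clean_triangles)
```

Real asset pipelines also deal with near-duplicate vertices, flipped normals, and non-manifold edges, which this sketch does not attempt.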

3D Printing Developments Elevate Product Imagery - Verifying visual accuracy in simulated environments

Ensuring visual fidelity in digital product representations is entering a complex new phase, particularly as retail experiences become ever more mediated by synthetic environments. With highly advanced generative AI now producing visuals at unprecedented scale, the challenge for accuracy has subtly shifted. It's no longer just about whether an image looks real, but whether it truly embodies the specific physical attributes and behaviors of a product under simulated conditions. This necessitates novel approaches to verification, moving beyond simple human perception to sophisticated automated checks for material consistency, lighting physics, and subtle AI-induced distortions that might be visually plausible but physically incorrect. As computational creativity reaches new heights, the evolving frontier of verification is to instill genuine confidence in an audience presented with hyper-realistic, yet non-existent, staging, while navigating the nuances of what 'accurate' truly means in a constructed digital reality.

While the rapid evolution of virtual models and generative AI has undeniably reshaped product visualization, ensuring the veracity of these synthetic assets presents a continuous and multifaceted engineering challenge. Here are some observations on how the field is addressing the critical task of verifying visual accuracy in simulated environments, as of mid-2025:

The precision afforded by virtual models is now being harnessed for advanced metrology. We’re seeing integrated digital metrology systems that can perform sub-micron level analyses directly on a product’s virtual twin. This isn't just about rendering; it's about programmatically inspecting a digital model's dimensions, tolerances, and surface finishes against engineering specifications with a rigor that, in some cases, exceeds the practical limitations of traditional physical inspection early in the design cycle. The crucial step remains: ensuring the digital representation itself is a faithful, verifiable ground truth for such precise measurements.
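A heavily simplified sketch of what programmatic inspection against a specification can look like: measure the bounding-box extents of a model's vertices and compare each one to a nominal value and tolerance. The helper names, the specification values, and the vertex data are all made up for illustration, and real metrology covers far more than bounding boxes.

```python
def bounding_box_extents(vertices):
    """Return the axis-aligned extents of a vertex list, in model units."""
    xs, ys, zs = zip(*vertices)
    return {
        "width": max(xs) - min(xs),
        "depth": max(ys) - min(ys),
        "height": max(zs) - min(zs),
    }


def check_tolerances(measured, spec):
    """Compare measured dimensions to (nominal, tolerance) pairs."""
    report = {}
    for name, (nominal, tolerance) in spec.items():
        deviation = measured[name] - nominal
        report[name] = {
            "deviation_mm": deviation,
            "ok": abs(deviation) <= tolerance,
        }
    return report


# Hypothetical specification: nominal size and allowed deviation in mm.
SPEC_MM = {"width": (80.0, 0.05), "depth": (80.0, 0.05), "height": (95.0, 0.05)}

# Hypothetical model vertices in mm; the height is deliberately out of spec.
vertices_mm = [
    (0.0, 0.0, 0.0),
    (80.02, 0.0, 0.0),
    (80.02, 79.98, 0.0),
    (0.0, 79.98, 95.2),
]

report = check_tolerances(bounding_box_extents(vertices_mm), SPEC_MM)
for name, result in report.items():
    print(name, "OK" if result["ok"] else "OUT OF TOLERANCE")
```

The check is only as trustworthy as the model it inspects, which is the point made above about the digital representation needing to be a verifiable ground truth.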

Beyond purely objective pixel comparisons, a fascinating area of research involves "perceptual validation networks." These AI models are trained not just on visual data, but on human behavioral insights like eye-tracking and interaction patterns. Their purpose is to predict whether a generated image not only appears realistic but also subtly conveys the intended product attributes and emotional resonance a human viewer would perceive. This moves beyond technical correctness to assessing the subjective fidelity of product presentation, a complex problem of bridging algorithmic generation with human psychology, which can sometimes highlight unintentional visual biases despite technical perfection.

With the escalating indistinguishability between sophisticated synthetic images and genuine photographs, the issue of provenance has become paramount. To counter this, cryptographic methods are increasingly being integrated directly into image generation pipelines. This involves embedding immutable metadata, akin to digital watermarks and blockchain-like timestamps, into generated product visuals. This allows for a verifiable audit trail, proving an image originated from an authenticated 3D model and passed through a specific, authorized rendering process. The technical infrastructure for this is maturing, but establishing universally accepted standards for digital image authentication remains an ongoing, complex discussion.
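The core idea of a verifiable audit trail can be sketched with a hash of the image bytes plus a keyed signature over the provenance record. This example uses HMAC from Python's standard library purely for brevity; it is a symmetric scheme, and a real deployment would more likely use public-key signatures and an established provenance standard. The field names, model ID, and key are hypothetical.

```python
import hashlib
import hmac
import json


def sign_render(image_bytes, model_id, renderer, key):
    """Build a provenance record for a rendered image and sign it."""
    record = {
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "model_id": model_id,
        "renderer": renderer,
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["signature"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return record


def verify_render(image_bytes, record, key):
    """Check that the image matches the record and the record is untampered."""
    claimed = dict(record)
    signature = claimed.pop("signature", "")
    if claimed.get("image_sha256") != hashlib.sha256(image_bytes).hexdigest():
        return False  # image bytes were altered after signing
    payload = json.dumps(claimed, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(signature, expected)


# Hypothetical inputs: placeholder bytes stand in for a real image file.
key = b"demo-secret-key"
image = b"placeholder image bytes"
record = sign_render(image, "chair-v3", "renderer-a", key)

print(verify_render(image, record, key))
print(verify_render(image + b"x", record, key))
```

Any change to the image bytes or to a field in the record makes verification fail, which is the property an audit trail depends on.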

For applications demanding extremely high fidelity and dynamic accuracy, we’re observing the deployment of closed-loop verification systems. This involves instrumented physical "proxies" – highly accurate replicas or material samples – placed in real-world environments, continuously capturing precise data on light interaction, reflections, and environmental conditions. This real-time empirical data is then used to dynamically calibrate the virtual rendering engine. This feedback loop aims to ensure that the simulated product's visual behavior precisely mirrors its physical counterpart under diverse and changing real-world scenarios, a robust but computationally intensive approach to grounding virtual accuracy in empirical reality.
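In its simplest form, calibrating a renderer against measurements can be treated as fitting a correction that maps rendered values onto measured ones. The sketch below fits a single gain and offset by ordinary least squares; this linear model, the `fit_gain_offset` helper, and the sample readings are assumptions for illustration, and the systems described above would calibrate many more parameters.

```python
def fit_gain_offset(rendered, measured):
    """Least-squares fit of measured ~= gain * rendered + offset."""
    n = len(rendered)
    mean_r = sum(rendered) / n
    mean_m = sum(measured) / n
    covariance = sum(
        (r - mean_r) * (m - mean_m) for r, m in zip(rendered, measured)
    )
    variance = sum((r - mean_r) ** 2 for r in rendered)
    gain = covariance / variance
    offset = mean_m - gain * mean_r
    return gain, offset


# Hypothetical readings: renderer output vs. sensor data from a physical proxy.
rendered = [0.1, 0.2, 0.4, 0.8]
measured = [2.0 * r + 0.05 for r in rendered]  # synthetic "sensor" values

gain, offset = fit_gain_offset(rendered, measured)
calibrated = [gain * r + offset for r in rendered]
print(round(gain, 6), round(offset, 6))
```

In a closed loop the fit would be repeated as new measurements arrive, so the correction tracks changing conditions.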

Finally, the nuances of color accuracy are driving a move towards spectral rendering for verification. Rather than processing light only in red, green, and blue channels, these pipelines simulate how materials interact with light across the visible spectrum. This physically based approach gives a more accurate representation of color because it accounts for wavelength-dependent scattering and absorption. The goal is to keep generated product colors consistent and accurate across many display technologies and viewing conditions, in line with standardized color spaces. The exercise also shows how easily conventional RGB workflows can introduce subtle but significant distortions.
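A toy example shows where an RGB-only workflow can go wrong. Two light sources that reduce to the same RGB value can still produce different colors on the same material, because the material responds to each wavelength separately. All numbers below are invented: six spectral samples and box-shaped sensor sensitivities rather than real colorimetric data.

```python
# Toy sensor sensitivities over six spectral samples (not real CIE data).
SENSITIVITIES = [
    [0.0, 0.0, 0.0, 0.0, 0.5, 0.5],  # red channel
    [0.0, 0.0, 0.5, 0.5, 0.0, 0.0],  # green channel
    [0.5, 0.5, 0.0, 0.0, 0.0, 0.0],  # blue channel
]


def to_rgb(spectrum):
    """Project a sampled spectrum onto the three toy sensor channels."""
    return tuple(
        sum(s * w for s, w in zip(spectrum, channel))
        for channel in SENSITIVITIES
    )


def spectral_render(light, reflectance):
    """Multiply per wavelength first, then convert to RGB."""
    return to_rgb([l * r for l, r in zip(light, reflectance)])


def rgb_render(light, reflectance):
    """Convert to RGB first, then multiply per channel."""
    return tuple(l * r for l, r in zip(to_rgb(light), to_rgb(reflectance)))


flat_light = [1.0] * 6                          # smooth, broadband light
spiky_light = [0.0, 2.0, 0.0, 2.0, 0.0, 2.0]    # narrow-band light
material = [0.9, 0.1, 0.9, 0.1, 0.9, 0.1]       # wavelength-selective surface

for name, light in (("flat", flat_light), ("spiky", spiky_light)):
    print(name, "light rgb:", to_rgb(light))
    print("  spectral:", spectral_render(light, material))
    print("  rgb-only:", rgb_render(light, material))
```

Both lights look identical once reduced to RGB, so the RGB-only path predicts the same result for each, while the spectral path predicts a much darker surface under the narrow-band light.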
