Product Visuals AI Reality Versus Industry Expectations

AI's Visual Triumphs and Tribulations for Online Products

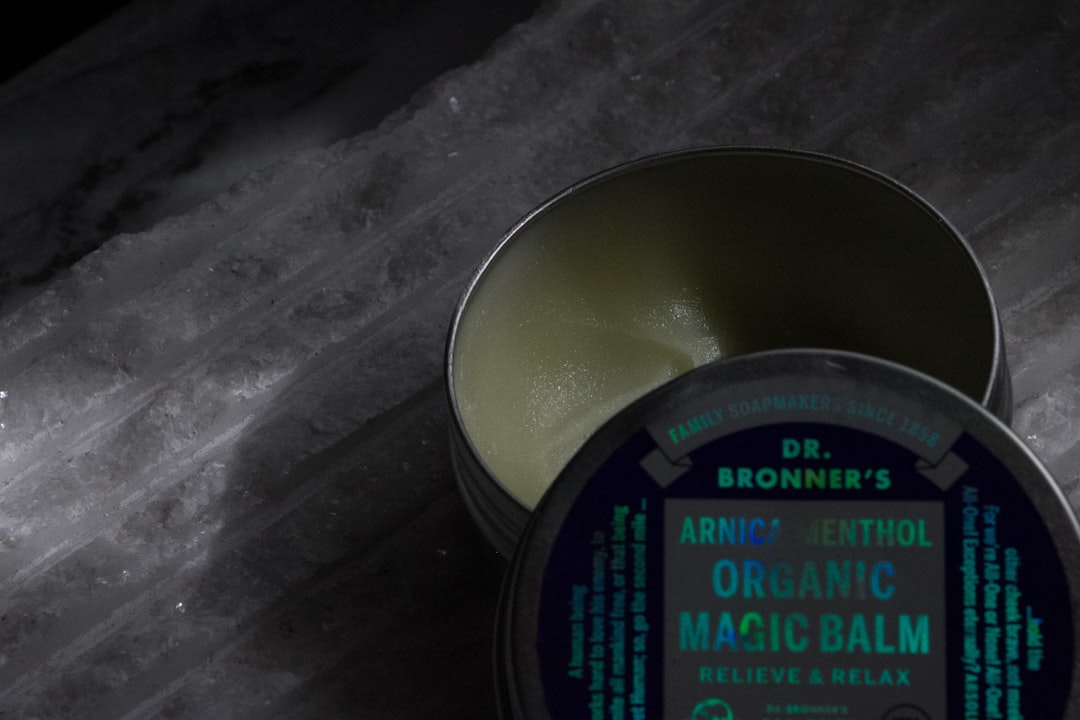

Advances in AI image generation have transformed online product imagery, bringing both remarkable successes and stubborn challenges. AI image generators can now produce striking scenes that capture attention instantly, yet they often fall short on authenticity and on the small details that match a buyer's real-world expectations. AI-driven product staging enhances the visual experience but still struggles to convey the tactile presence and natural ambiance of a physical product. As e-commerce adapts, balancing AI's capabilities against the need for genuine, relatable visuals remains a central question for businesses trying to engage their market.

Generating diverse scene settings for a product on demand, tailored to an individual shopper's browsing history or preferences, appears to strengthen how connected shoppers feel to an item, which can translate into more completed purchases. The sheer number of distinct visual narratives AI can produce for a single product is remarkable.

Even when AI renders highly realistic images, small glitches, such as a shadow falling in the wrong direction or an odd surface texture, can produce an unsettling effect often described as the "uncanny valley." This slight dissonance can quietly erode a viewer's confidence in what they are seeing and make them hesitate before buying. It is a fascinating perceptual challenge.

A less-discussed consequence of generating product visuals at scale is energy use. Both the intensive initial training of large generative networks and the ongoing cost of running them add up to a sizable, often overlooked energy footprint in digital product staging. It is an environmental cost we are only beginning to appreciate.

As of mid-2025, marketplaces and retailers increasingly deploy computer vision algorithms to scrutinize product imagery, flagging subtle alterations or misleading visual cues in listings. These systems act as an automated safeguard against dubious visual claims, creating an interesting feedback loop in which AI helps moderate AI's output.
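The article does not say which techniques these moderation systems use, and production systems likely rely on learned models. As a minimal illustration of the underlying idea, the sketch below compares a reference product photo with a listing image using an "average hash," a simple, well-known perceptual hash: each image is shrunk to an 8x8 grid, each cell becomes one bit (brighter or darker than the mean), and a large Hamming distance between the two 64-bit hashes suggests the listing was materially altered. Images are assumed to be grayscale 2D lists, and the function names and the 10-bit threshold are hypothetical choices for illustration.

```python
from typing import List

Image = List[List[float]]  # assumed format: grayscale pixels 0-255, row-major


def downscale(img: Image, size: int = 8) -> Image:
    """Block-average an image down to size x size (assumes both dims >= size)."""
    h, w = len(img), len(img[0])
    out = []
    for by in range(size):
        y0, y1 = by * h // size, (by + 1) * h // size
        row = []
        for bx in range(size):
            x0, x1 = bx * w // size, (bx + 1) * w // size
            block = [img[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def average_hash(img: Image, size: int = 8) -> int:
    """One bit per cell: 1 if the cell is brighter than the mean of all cells."""
    cells = [p for row in downscale(img, size) for p in row]
    mean = sum(cells) / len(cells)
    bits = 0
    for p in cells:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def flag_if_altered(reference: Image, listing: Image, threshold: int = 10) -> bool:
    """Hypothetical check: flag the listing when too many hash bits differ."""
    return hamming(average_hash(reference), average_hash(listing)) > threshold


if __name__ == "__main__":
    # Toy 64x64 horizontal gradient standing in for a reference product photo.
    reference = [[4 * x for x in range(64)] for _ in range(64)]
    brighter = [[p + 3 for p in row] for row in reference]     # global exposure tweak
    edited = [[255 - p for p in row] if y < 32 else list(row)  # top half replaced
              for y, row in enumerate(reference)]
    print(flag_if_altered(reference, brighter))  # False
    print(flag_if_altered(reference, edited))    # True
```

Because each bit records only whether a cell is brighter than the average, a uniform exposure change leaves the hash untouched while a replaced region flips many bits. The same coarseness means small, targeted edits can slip through, so a hash like this would serve only as a cheap first-pass filter.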

AI can produce strikingly lifelike product renders, but observed trends suggest that extreme visual perfection does not always increase viewer interest. An image that looks too flawless or clinically pristine can feel detached from reality and therefore less relatable or trustworthy to a shopper. Beyond a certain point, further refinement seems to yield little, or even negative, return in genuine connection.

Where AI Still Misses the Mark in Product Staging Dreams

AI continues to push the boundaries of visual creation, but in product staging it still faces hurdles that go beyond surface imperfections. These systems struggle to grasp and consistently convey the nuanced, often unspoken essence of a brand's identity. Generating scenes that resonate across diverse cultures, free of unintended bias or misinterpretation, is another persistent challenge. And when a product must be shown in a specific functional context or in a complex interaction, AI models often lack real-world logic, producing scenarios that simply do not ring true.

As of mid-2025, while AI’s visual capabilities are undeniably impressive, certain nuanced aspects of product staging continue to elude fully autonomous generation.

One persistent challenge researchers observe is AI's struggle with accurately simulating the intricate physics of light interacting with various material properties. Surfaces like gossamer fabrics, translucent objects, or those with complex subsurface scattering often reveal AI's limitations, as the generated images, under close inspection, don't quite capture the natural sheen, depth, or absorption characteristics that our visual system expects. It's a subtle but significant deviation from physical reality.

Conveying implied motion or dynamic interaction also remains largely out of reach for current AI models. The natural fall of a textile in motion, the shimmer of liquid being poured, or the way a moving product subtly disturbs its surroundings often come out stiff or missing, suggesting that AI still struggles to represent temporal change and continuous physical processes within a still image.

While AI can certainly produce a vast array of settings, a notable barrier lies in its ability to consistently grasp and apply a brand's unique subjective aesthetic or emotional tone. What makes a visual 'feel' a certain way, resonating deeply with specific audience sentiments, often necessitates an intuitive human touch. Current systems frequently default to generic but polished visuals, rather than consistently achieving that nuanced, brand-specific 'voice' without substantial post-generation human intervention.

Similarly, the challenge of embedding genuine cultural context and specific demographic relevance into AI-generated product visuals is still quite pronounced. Automated staging can easily miss the subtle cues, symbolism, or lifestyle indicators vital for authentic connection with niche or diverse international audiences. This can result in visuals that, while technically proficient, lack the deeper, culturally informed narrative that truly resonates.

Finally, the aspiration for truly physically accurate light transport simulations and complex material rendering across diverse staging scenarios demands immense computational horsepower. In practice, this often means current AI models must employ various approximations or simplified rendering techniques to achieve reasonable generation times. Under expert scrutiny, these shortcuts can sometimes lead to deviations from ground truth realism, revealing that the balance between visual fidelity and practical computational limits is still a critical engineering hurdle.

The Unseen Human Touch Behind Automated Product Glamour

Having examined both sides of AI's performance in product visuals, from generating vast quantities of imagery to its persistent struggles with authenticity, physical realism, and emotional resonance, this section turns from the technical outputs of machine learning to an often-overlooked but critical element: the human touch. Even in an increasingly automated landscape, human creativity, discernment, and strategic intent remain essential for giving product visuals the depth, context, and persuasive power that move them beyond visual polish to genuine engagement.

The seemingly self-generated polish of AI product imagery does not arise from a vacuum. It is rooted in enormous datasets compiled by people, which carry human tastes, biases, and visual norms. The AI's sense of what looks "good" or "desirable" is therefore largely a mirror of the aesthetic choices embedded in its training data. It is a fascinating glimpse into the echo chamber of digital aesthetics.

Steering generative AI systems toward specific, stylistically coherent product visuals remains a specialized human skill. It calls for expert "prompt engineers" who master nuanced instructions and iterative refinement. This human-led feedback loop is what directs the AI's capabilities toward precise creative and visual objectives, showing that fine-grained control is far from automated.

Even with the volume of images AI can produce, keeping a consistent brand identity, in both visual style and emotional tone, across thousands of AI-generated product visuals still demands substantial human art direction and careful post-production. This human oversight keeps automated outputs aligned with the overarching brand story and established visual guidelines, preventing visual drift.

It's an overlooked detail that many leading-edge AI systems for product visualization don't actually invent the product's underlying shape or structure. Instead, they operate by digitally 'staging' and enhancing existing 3D models or Computer-Aided Design (CAD) files, which are themselves the meticulous creations of human industrial designers. This fundamental dependency highlights that the digital "perfect form" of a product's visual journey almost always starts with human ingenuity.

Even where AI can simulate light interactions with impressive physical accuracy, human viewers often prefer subtly art-directed, aesthetically refined lighting and shadows in product visuals. The goal is emotional impact rather than physical correctness. Our appreciation often favors artistic interpretation over strict physical rendering, a subtle but critical distinction that these AI systems do not grasp without specific human guidance or training on such preferences.

Beyond the Hype: Practical AI Adoption in E-commerce Visuals

Beyond the initial excitement about AI's raw image-generation power, the conversation about its practical use in e-commerce visuals is shifting. As of mid-2025, the focus is increasingly on integrating these tools into daily operations and optimizing visuals not only for aesthetic appeal but for business outcomes such as fewer returns and higher conversion rates. The challenge is less about what AI can create and more about how effectively it can be woven into complex digital pipelines to deliver consistent value, which calls for a more strategic, and often more critical, approach to implementation across the product lifecycle.

Here are five surprising observations about practical AI adoption in e-commerce visuals as of 16 July 2025:

Intriguingly, some advanced AI systems now go beyond static image generation, serving visuals that adjust to individual viewer segments based on real-time interaction data. What is displayed is refined continuously and autonomously toward each audience's apparent preferences, with the aim of maximizing viewer retention or interaction. The underlying feedback mechanisms are quite sophisticated.

An emerging capability has AI models sift through enormous volumes of global visual data, not only to generate images but also to detect patterns in visual aesthetics and consumer preferences. This analytical layer aims to forecast shifts in desired visual styles, potentially shaping the design language of future generated imagery rather than merely reacting to existing trends. One wonders about the risk of an aesthetic monoculture here.

A fascinating application gaining traction is the generation of highly detailed product visuals from rudimentary inputs like engineering schematics or early 3D models, well before physical prototypes exist. This means a product's appearance can be 'simulated' and visually explored months ahead of its actual manufacturing, fundamentally altering traditional product development and previewing pipelines. The leap from conceptual data to photorealistic render is quite compelling, though maintaining accuracy can be a hurdle.

We are seeing systems trained on datasets that draw on neuroaesthetics, allowing them to adjust subtle visual elements such as color intensity, framing, or focal depth with the stated aim of eliciting specific emotional or attentional states in viewers. It is an intriguing step from purely realistic representation toward a more deliberate, and potentially manipulative, influence on perception, though the actual efficacy and ethical implications remain subjects of active discussion.

An interesting evolution in training methodologies is the increasing reliance on synthetically generated imagery for populating the massive datasets used by AI product staging models, moving away from exclusive dependence on photographic captures. This approach offers engineers highly granular control over variables like surface textures, light angles, and environmental characteristics, and hypothetically, a way to mitigate some of the inherent biases present in real-world image collections. However, the quality and diversity of these synthetic environments still present considerable challenges, and one must consider whether new, perhaps less obvious, biases might emerge from the generation process itself.
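The synthetic-data approach in the last observation resembles what is often called domain randomization: scene parameters are sampled rather than photographed, so their distribution is under the engineer's control. The sketch below assumes a hypothetical renderer (not shown) that consumes these configs; it draws random light angles while cycling through every material/background pair so that no single context dominates the dataset. The material and background lists, angle ranges, and function names are illustrative assumptions, not any particular pipeline's settings.

```python
import random
from dataclasses import dataclass
from typing import List

# Hypothetical discrete factors; a real pipeline would define its own.
MATERIALS = ["matte_plastic", "brushed_metal", "frosted_glass", "woven_fabric"]
BACKGROUNDS = ["kitchen", "studio", "outdoor_patio", "office"]


@dataclass(frozen=True)
class SceneConfig:
    material: str
    background: str
    light_azimuth_deg: float    # direction of the key light around the product
    light_elevation_deg: float  # height of the key light above the horizon


def sample_configs(n: int, seed: int = 0) -> List[SceneConfig]:
    """Sample n scene configs for a (hypothetical) synthetic renderer.

    Discrete factors are stratified: the (material, background) pairs are used
    in a fixed cycle, so with k pairs each appears floor(n/k) or ceil(n/k)
    times. Continuous lighting factors are drawn uniformly at random.
    """
    rng = random.Random(seed)
    pairs = [(m, b) for m in MATERIALS for b in BACKGROUNDS]
    configs = []
    for i in range(n):
        material, background = pairs[i % len(pairs)]
        configs.append(SceneConfig(
            material=material,
            background=background,
            light_azimuth_deg=rng.uniform(0.0, 360.0),
            light_elevation_deg=rng.uniform(10.0, 80.0),
        ))
    rng.shuffle(configs)  # avoid a fixed ordering when batches are drawn for training
    return configs


if __name__ == "__main__":
    from collections import Counter
    batch = sample_configs(160)
    print(Counter((c.material, c.background) for c in batch).most_common(3))
```

The sketch also makes the article's caveat concrete: balance is guaranteed only over the factors someone chose to enumerate, so the lists and ranges themselves become a new, less visible place for bias to enter.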
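The first observation above, visuals that adapt to viewer segments from real-time interaction data, describes a feedback loop the article does not specify. One common way to build such a loop is a multi-armed bandit. The sketch below is a per-segment epsilon-greedy bandit: for each segment it usually shows the image variant with the best observed click-through rate and occasionally explores the others. The class, the segment and variant names, the 10% exploration rate, and the simulated click probabilities are all hypothetical, and a production system would more likely use richer contextual models.

```python
import random
from typing import Dict, Hashable, List, Tuple


class VariantSelector:
    """Per-segment epsilon-greedy bandit over image variants (hypothetical sketch)."""

    def __init__(self, variants: List[str], epsilon: float = 0.1, seed: int = 0):
        self.variants = list(variants)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        # (segment, variant) -> [impressions, clicks]
        self.stats: Dict[Tuple[Hashable, str], List[int]] = {}

    def _score(self, segment: Hashable, variant: str) -> float:
        shows, clicks = self.stats.get((segment, variant), (0, 0))
        # Untried variants score infinity, so exploitation tries each one first.
        return clicks / shows if shows else float("inf")

    def choose(self, segment: Hashable) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.variants)  # explore
        return max(self.variants, key=lambda v: self._score(segment, v))  # exploit

    def record(self, segment: Hashable, variant: str, clicked: bool) -> None:
        entry = self.stats.setdefault((segment, variant), [0, 0])
        entry[0] += 1
        entry[1] += int(clicked)


def simulate(selector: VariantSelector,
             true_ctr: Dict[Tuple[str, str], float],
             rounds: int = 10_000, seed: int = 1) -> None:
    """Replay synthetic traffic; true_ctr holds toy click probabilities, not real data."""
    sim = random.Random(seed)
    segments = sorted({seg for seg, _ in true_ctr})
    for _ in range(rounds):
        seg = sim.choice(segments)
        shown = selector.choose(seg)
        selector.record(seg, shown, sim.random() < true_ctr[(seg, shown)])


if __name__ == "__main__":
    toy_ctr = {  # made-up click probabilities for the demo
        ("outdoor", "studio_white"): 0.05, ("outdoor", "lifestyle_scene"): 0.30,
        ("minimalist", "studio_white"): 0.30, ("minimalist", "lifestyle_scene"): 0.05,
    }
    selector = VariantSelector(["studio_white", "lifestyle_scene"])
    simulate(selector, toy_ctr)
    selector.epsilon = 0.0  # pure exploitation to read off the learned preference
    print(selector.choose("outdoor"), selector.choose("minimalist"))
```

Epsilon-greedy is the simplest option; Thompson sampling or upper-confidence-bound methods usually explore more efficiently, and a fixed exploration rate means a slice of traffic always sees a weaker variant.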
